ScrewSplat: Integrating Screw Model with 3D Gaussians

Core Components of ScrewSplat

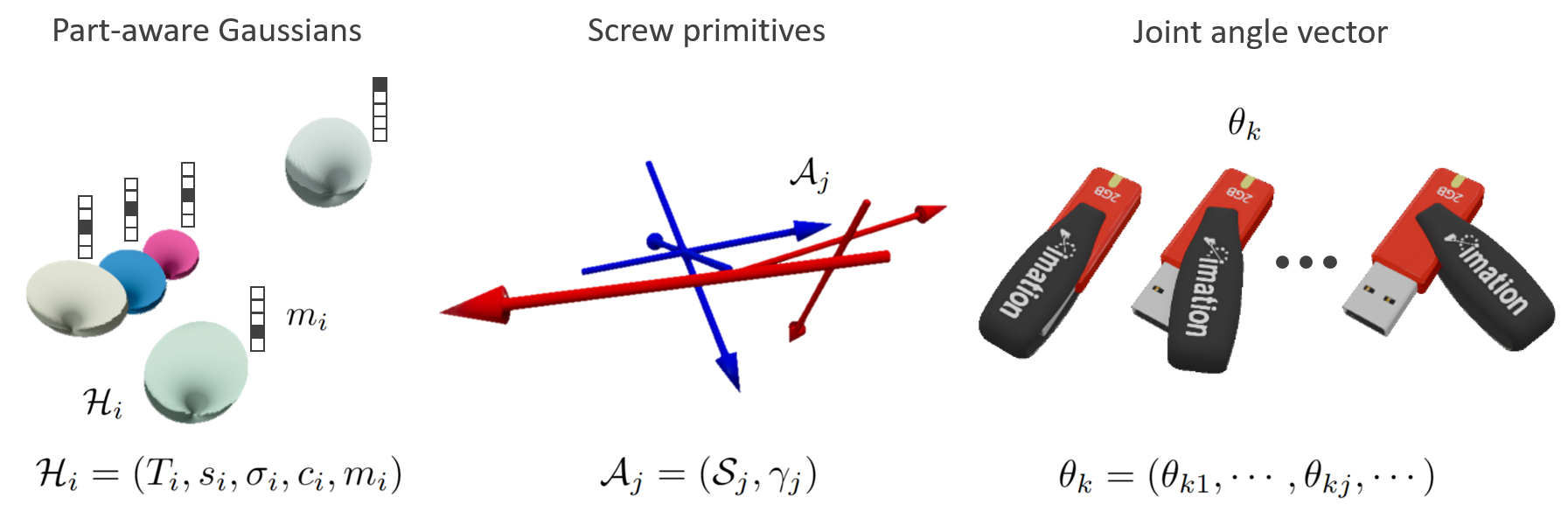

First, we splat screw primitives, where the \( j \)th screw primitive \( \mathcal{A}_j \) is parametrized by a tuple \( (\mathcal{S}_j, \gamma_j) \), with \( \mathcal{S}_j \) representing a screw axis and \( \gamma_j \in [0, 1] \) denoting the confidence assigned to that screw axis.
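To make the role of \( \mathcal{S}_j \) concrete, here is a minimal NumPy sketch of how a screw axis and a joint angle produce a rigid-body motion. It assumes the six-vector convention common in robotics texts (e.g., Lynch and Park, *Modern Robotics*): \( \mathcal{S} = (\omega, v) \) with \( \lVert \omega \rVert = 1 \) for a revolute joint, and \( \omega = 0 \), \( \lVert v \rVert = 1 \) for a prismatic one. The function names `skew` and `screw_exp` are hypothetical and not taken from the ScrewSplat code.

```python
import numpy as np

def skew(w: np.ndarray) -> np.ndarray:
    """3x3 skew-symmetric matrix [w], so that skew(w) @ x == np.cross(w, x)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])

def screw_exp(S: np.ndarray, theta: float) -> np.ndarray:
    """4x4 rigid transform exp([S] theta) for a normalized screw axis S = (w, v)."""
    w, v = S[:3], S[3:]
    T = np.eye(4)
    if np.linalg.norm(w) < 1e-9:        # prismatic: w = 0, translate along unit v
        T[:3, 3] = v * theta
        return T
    W = skew(w)                          # revolute: w is assumed to be a unit vector
    W2 = W @ W
    # Rodrigues' formula for the rotation part.
    T[:3, :3] = np.eye(3) + np.sin(theta) * W + (1.0 - np.cos(theta)) * W2
    # Translation part of the screw motion.
    G = np.eye(3) * theta + (1.0 - np.cos(theta)) * W + (theta - np.sin(theta)) * W2
    T[:3, 3] = G @ v
    return T

# Revolute axis along z through the point q = (1, 0, 0): v = -w x q.
w = np.array([0.0, 0.0, 1.0])
q = np.array([1.0, 0.0, 0.0])
S_rev = np.concatenate([w, -np.cross(w, q)])
T = screw_exp(S_rev, np.pi / 2)
# T maps the point (2, 0, 0) to approximately (1, 1, 0) and leaves q fixed.
```

In this reading, a screw primitive only needs \( \mathcal{S}_j \) and a joint angle to move its part. The confidence \( \gamma_j \) does not enter the motion itself.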

Next, we splat part-aware Gaussian primitives, where the \( i \)th primitive \( \mathcal{H}_i \) is parametrized by an augmented tuple \( (T_i, s_i, \sigma_i, c_i, m_i) \). The parameters \( (T_i, s_i, \sigma_i, c_i) \) are identical to those of Gaussians in standard Gaussian splatting. Here, \( m_i = (m_{i0}, \cdots, m_{ij}, \cdots) \) is a point on the probability simplex, i.e., a probability distribution over the parts defined by the screw primitives. Specifically, \( m_{i0} \) denotes the probability that the Gaussian belongs to the static base part, while \( m_{ij} \) for \( j \geq 1 \) denotes the probability that the Gaussian belongs to the part whose motion is governed by the \( j \)th screw primitive \( \mathcal{A}_j \).
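One way to keep \( m_i \) on the simplex during gradient-based optimization is to store unconstrained logits and pass them through a softmax. This is an assumption of the sketch, not necessarily how ScrewSplat parametrizes \( m_i \). The sketch also assumes the usual Gaussian-splatting meanings for the shared parameters: \( T_i \) is a pose (rotation and mean), \( s_i \) the per-axis scales, \( \sigma_i \) the opacity, and \( c_i \) the color. The class name `PartAwareGaussian` and field `m_logits` are hypothetical.

```python
from dataclasses import dataclass
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Map unconstrained logits to a point on the probability simplex."""
    z = logits - logits.max()          # shift by the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

@dataclass
class PartAwareGaussian:
    T: np.ndarray         # 4x4 pose: rotation and mean of the Gaussian
    s: np.ndarray         # per-axis scales, shape (3,)
    sigma: float          # opacity
    c: np.ndarray         # RGB color, shape (3,); real systems often use SH coefficients
    m_logits: np.ndarray  # unconstrained part logits, shape (J + 1,)

    @property
    def m(self) -> np.ndarray:
        """Part membership m_i = (m_i0, m_i1, ..., m_iJ); m_i0 is the static base."""
        return softmax(self.m_logits)

# A Gaussian that mostly belongs to the part driven by screw primitive 1 (J = 2).
g = PartAwareGaussian(T=np.eye(4), s=np.full(3, 0.01), sigma=0.9,
                      c=np.array([0.8, 0.2, 0.2]),
                      m_logits=np.array([0.0, 2.0, 0.0]))
m = g.m  # nonnegative entries summing to 1, with the largest at index 1
```

Because the softmax output is always nonnegative and sums to one, the optimizer can update `m_logits` freely without any projection step.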

Lastly, we assign a joint angle vector \( \theta_k = (\theta_{k1}, \cdots, \theta_{kj}, \cdots) \) to the RGB observations corresponding to the \( k \)th configuration of the articulated object. Specifically, \( \theta_{kj} \) denotes the joint angle associated with the \( j \)th screw primitive \( \mathcal{A}_j \) in the \( k \)th configuration.

RGB Rendering Procedure with ScrewSplat

The key idea behind the RGB rendering procedure is to replicate Gaussians from each part-aware Gaussian primitive and assign each replica to either the static base or one of the movable parts. Specifically, from the \( i \)th part-aware Gaussian primitive \( \mathcal{H}_i \) we construct one Gaussian \( \mathcal{G}_{ij} \) for each part index \( j \geq 0 \). Each Gaussian \( \mathcal{G}_{ij} \) is assigned to the base part if \( j = 0 \), and to the movable part associated with the screw primitive \( \mathcal{A}_j \) if \( j \geq 1 \).
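The replication step can be sketched for a single primitive and a single configuration \( k \). Two details are assumptions of this sketch rather than statements from the text above. First, the replica for part \( j \) is moved by that part's rigid motion \( P_j \) (in general \( P_j = \exp([\mathcal{S}_j]\theta_{kj}) \); here a simple revolute motion about the z-axis is built explicitly to stay self-contained). Second, each replica's opacity is scaled by \( m_{ij} \), so the replicas' opacities sum to \( \sigma_i \). The helper names `rot_z` and `replicate_gaussian` are hypothetical.

```python
import numpy as np

def rot_z(theta: float, pivot: np.ndarray) -> np.ndarray:
    """4x4 rotation by theta about the z-axis through `pivot` (a revolute joint)."""
    c, s = np.cos(theta), np.sin(theta)
    P = np.eye(4)
    P[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    P[:3, 3] = pivot - P[:3, :3] @ pivot   # keep the pivot point fixed
    return P

def replicate_gaussian(T_i, sigma_i, m_i, part_transforms):
    """Build replicas G_ij (j = 0..J) of one part-aware Gaussian primitive.

    part_transforms[0] is the identity (static base); part_transforms[j] is the
    rigid motion of the part driven by screw j in the current configuration.
    Returns replica poses (J+1, 4, 4) and opacities (J+1,); weighting the
    opacity by m_ij is an assumption of this sketch.
    """
    m_i = np.asarray(m_i, dtype=float)
    if len(part_transforms) != len(m_i):
        raise ValueError("need one transform per part (base + one per screw)")
    poses = np.stack([P @ T_i for P in part_transforms])  # move mean and orientation
    opacities = sigma_i * m_i
    return poses, opacities

# One Gaussian centred at (2, 0, 0); one screw primitive (J = 1).
T_i = np.eye(4)
T_i[:3, 3] = [2.0, 0.0, 0.0]
theta_k = np.array([np.pi / 2])                  # joint angles for configuration k
parts = [np.eye(4), rot_z(theta_k[0], np.array([1.0, 0.0, 0.0]))]
poses, opacities = replicate_gaussian(T_i, 0.8, [0.25, 0.75], parts)
# Base replica stays at (2, 0, 0); the moving replica ends up near (1, 1, 0).
```

Under these assumptions, a Gaussian whose membership is split between parts appears partly in both places, and optimization can sharpen \( m_i \) so that the replica on the correct part explains the observations.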

Loss Function for Optimizing ScrewSplat

The part-aware Gaussian primitives, screw primitives, and joint angles are jointly optimized to minimize the following loss function: $$ \mathcal{L} = \mathcal{L}_{\text{render}} + \beta \sum_{j} \sqrt{\gamma_j}, $$ where \( \mathcal{L}_{\text{render}} \) is the RGB rendering loss and \( \beta \) weights the second term. This second term is a regularizer, referred to as the parsimony loss, that encourages ScrewSplat to represent articulated objects using as few screw primitives as possible. It not only pushes the model to select a minimal set of screws, but also promotes the identification of the most reliable ones.
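Here is a minimal NumPy sketch of this objective for a given rendering loss. `render_loss` stands in for \( \mathcal{L}_{\text{render}} \), which is not computed here, and the function names are hypothetical. The example also shows one way to see why a square root favors few screws. \( \sqrt{\cdot} \) is concave, so for the same total confidence the penalty is smaller when confidence is concentrated on fewer screw primitives. In addition, its derivative \( 1/(2\sqrt{\gamma_j}) \) grows as \( \gamma_j \to 0 \), so the penalty's pull toward zero is strongest for screws that are already weak.

```python
import numpy as np

def parsimony_loss(gamma, beta: float) -> float:
    """beta * sum_j sqrt(gamma_j) for screw confidences gamma_j in [0, 1]."""
    # Guard added for this sketch: sqrt needs gamma >= 0.
    gamma = np.clip(np.asarray(gamma, dtype=float), 0.0, 1.0)
    return float(beta * np.sqrt(gamma).sum())

def total_loss(render_loss: float, gamma, beta: float) -> float:
    """L = L_render + beta * sum_j sqrt(gamma_j)."""
    return render_loss + parsimony_loss(gamma, beta)

# Same total confidence (1.0), spread over two screws vs. concentrated on one.
spread = parsimony_loss([0.5, 0.5], beta=1.0)        # 2 * sqrt(0.5) = sqrt(2)
concentrated = parsimony_loss([1.0, 0.0], beta=1.0)  # 1.0
```

With \( \beta = 1 \), spreading unit confidence over two screws costs \( \sqrt{2} \approx 1.414 \), while putting it all on one screw costs 1, so the regularizer prefers the single-screw explanation when both render equally well.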